Deploying Large Language Models with Ease: Lessons shared at AI in Production 2024

Deploying Large Language Models with Ease: Lessons Shared at AI in Production 2024

Digits attended the AI in Production 2024 Conference held in Asheville, North Carolina and shared our experience deploying Open Source Large Language Models (LLMs).

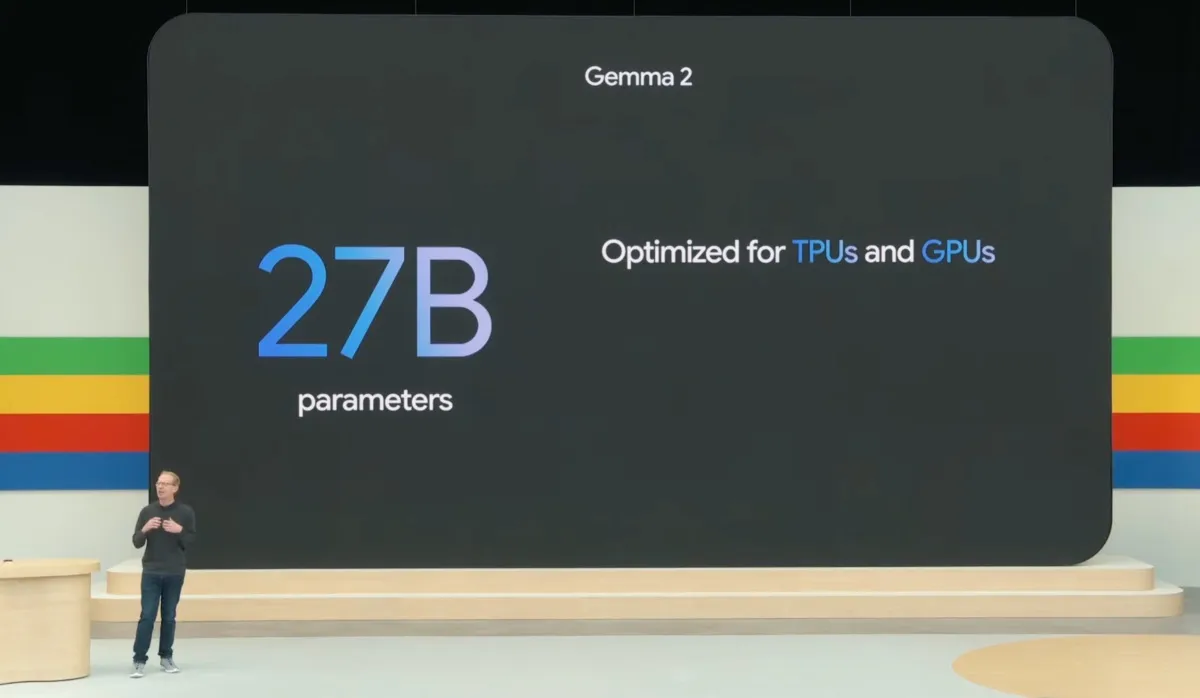

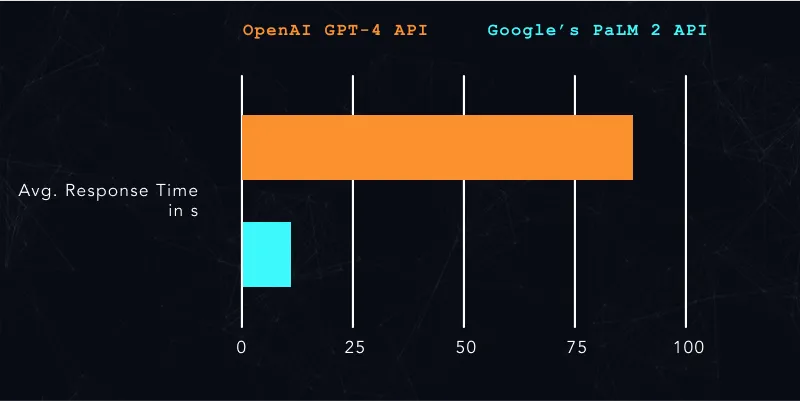

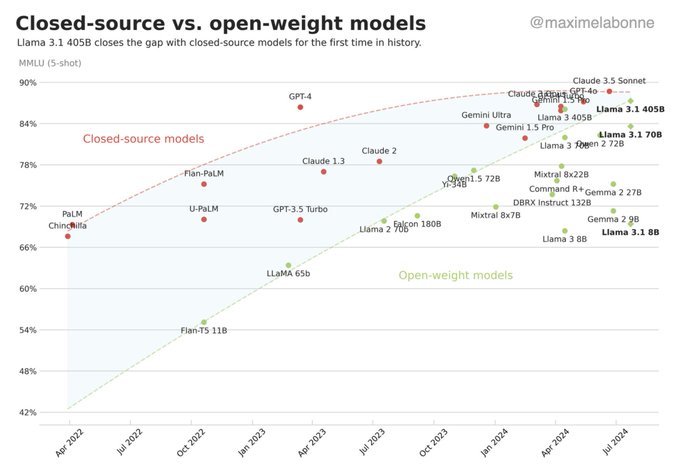

A few days ago, the CEO of Huggingface shared an astounding claim: open-source models are catching up fast to closed-source ones like OpenAI’s GPT-4. This is a major win for the open-source community and shows how AI is becoming more accessible to everyone. But, it also points out an important reality: using these advanced open-source models isn't without its challenges.

In my experience, the accuracy gap between open-source and proprietary is negligible now and open-source is cheaper, faster, more customizable & sustainable for companies! No excuse anymore not to become an AI builder based on open-source AI (vs outsourcing to APIs)!

— Clement Delangue (@ClementDelangue) December 16, 2021

Understanding the Landscape

To set the stage, let's first revisit why deploying open-source LLMs is worthwhile in the first place. The growing proficiency of these models offers undeniable advantages, primarily data privacy and customization.

Why Deploying Open-Source LLMs

- Data Privacy: Using open-source models means that customer data doesn’t have to be shared with third-party services. This self-hosted approach ensures heightened security and compliance with stringent data protection regulations.

- Fine-tuning: Open source models allow for fine-tuning to cater to specific use cases. This customization isn’t typically possible with closed-source models, which often only allow for the usage of pre-trained weights without modifications.

Now that the 'why' behind deploying open-source LLMs is clear, let’s delve into the 'how'. Here's what Digits learned from deploying LLMs, summarized into actionable insights.

Key Lessons from Digits' Deployment Journey

1. Model Selection: Optimize for One, Not Many

In the world of machine learning, it's easy to get swept up in the hype of the latest models. Focus and specialization pay off better in the long run. We found it crucial to select a single model that aligns well with our needs and optimize our infrastructure around it. This focused approach allows for deeper integration and better performance.

2. Tooling and Infrastructure

When we initially embarked on this journey, we assumed that existing hyperscaler Machine Learning platforms would seamlessly support LLM deployments. We couldn’t have been more mistaken. We encountered various issues that significantly hampered our deployment efforts.

Choosing the Right Deployment Tooling: We eventually settled on Titan ML for our deployment needs, finding it to be an excellent combination between deployment flexibility and support by Titan ML.

3. Inference Optimizations

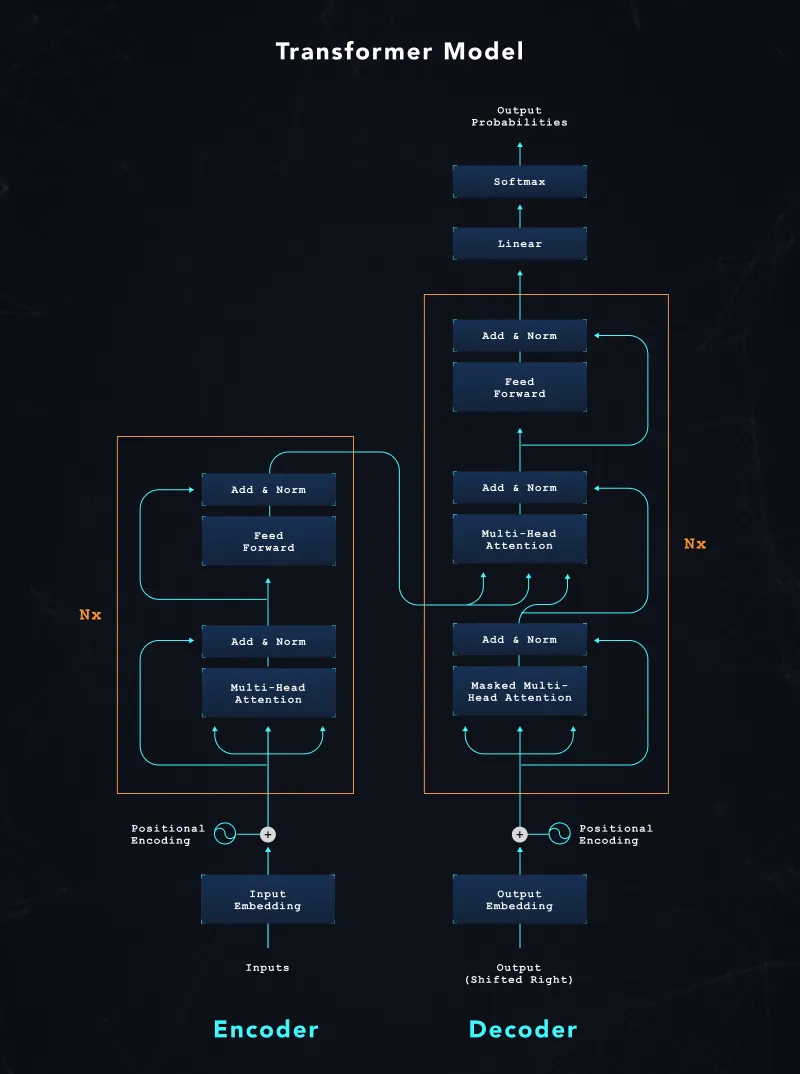

Making LLMs work in a production environment required us to address performance bottlenecks proactively. Here are the techniques that made a substantial difference:

Parallelization: Utilizing multiple GPUs can dramatically speed up inference times but it comes at a cost. Our expenses increased between 2-5x. Therefore, it's essential to evaluate if this trade-off works for your specific use case.

Efficient Attention (KV-Caching): Key-Value Caching can substantially improve inference speeds by storing previous results and reusing them. However, it’s vital to manage the memory usage intricately to avoid any performance degradation.

Quantization: This technique reduces the size and computational requirements of models by decreasing the precision of the numerical weights. We saw up to 90% reduction in inference costs, but it's highly problem-dependent. In some scenarios, the accuracy trade-offs might not be worth the cost savings.

Continuous Batching: This method involves grouping multiple inference requests together, thereby reducing the total number of computational steps. We saw a 2x speedup in inference time and hope for even higher speedups as this technology matures.

Putting It All Together: Our Use Cases

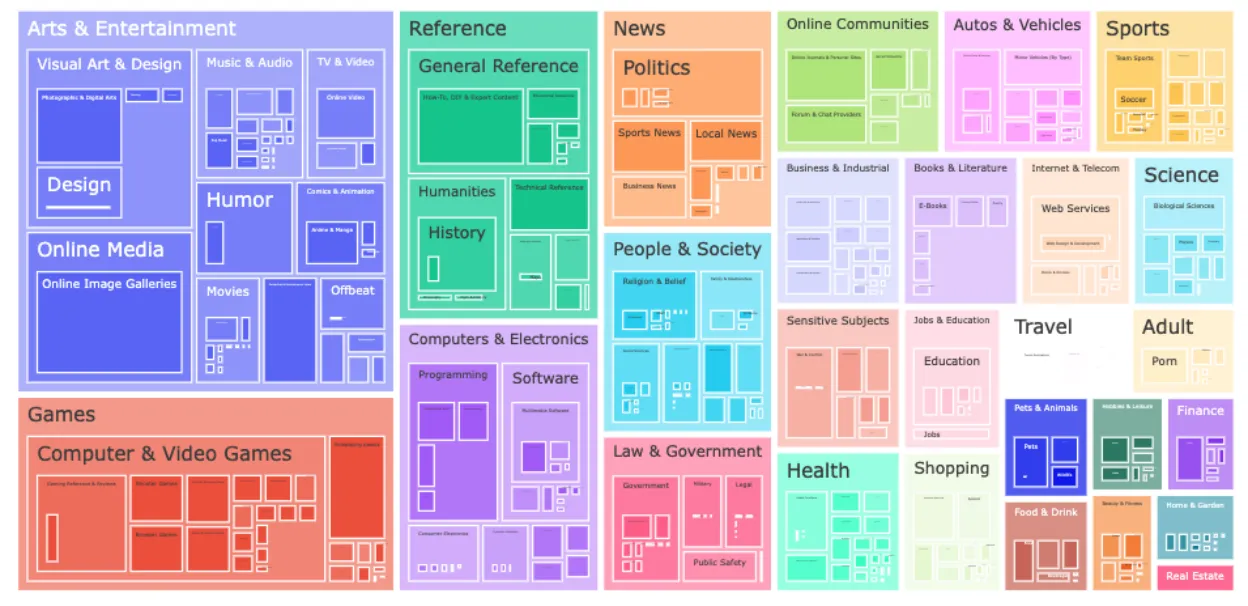

Digits employs Open Source LLMs for various applications, including understanding documents and converting unstructured data into structured data. Each use case brings its own set of challenges and optimization opportunities.

For instance, in document understanding, the quality of the input tokens and the pre-processing steps dramatically affect the accuracy and performance of the model.

Infrastructure Lessons

While the nuances of model selection and inference optimization are critical, don't overlook the mundane aspects of infrastructure, which can make or break the success of your deployment. Make sure you have the right backend systems in place that are resilient and scalable. Equally crucial is to employ monitoring and alerting systems that allow for rapid identification and resolution of any issues.

Conclusion and Next Steps

Deploying Large Language Models in production environments isn’t straightforward yet, but it is becoming increasingly simpler just 18 months ago. Our experience has shown that with the right model, tools, and optimizations, it is possible to deploy these models efficiently.

For those interested in diving deeper into our strategies and lessons learned, we’ve made our full presentation slides available below. They provide a more detailed breakdown of each technique and offer further insights into our deployment journey.

By sharing our experiences, we hope to contribute to the collective wisdom in the ML community, encouraging more organizations to harness the power of open-source LLMs safely and effectively. Happy deploying!

If you haven't already, check out our AI in Production 2024 Conference recap to learn more about the latest trends and insights from the event.